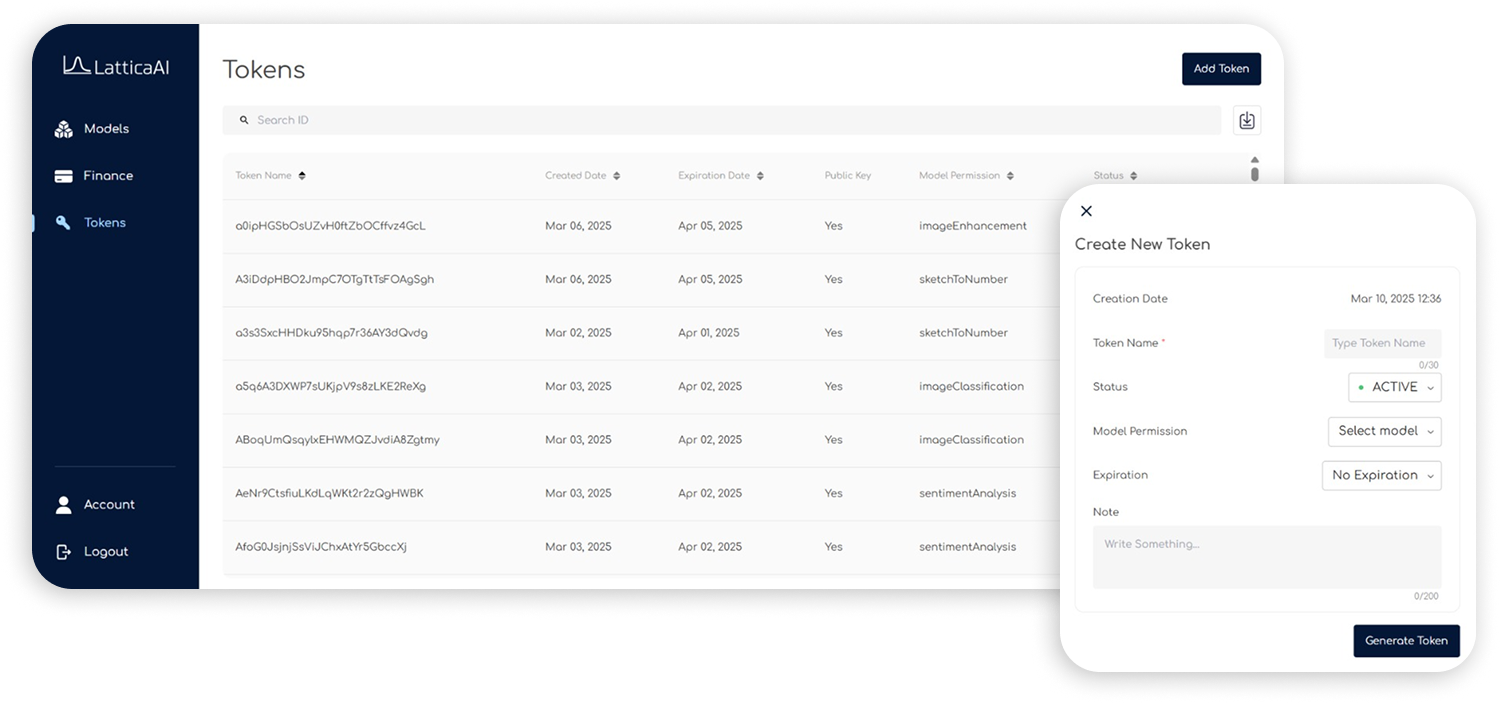

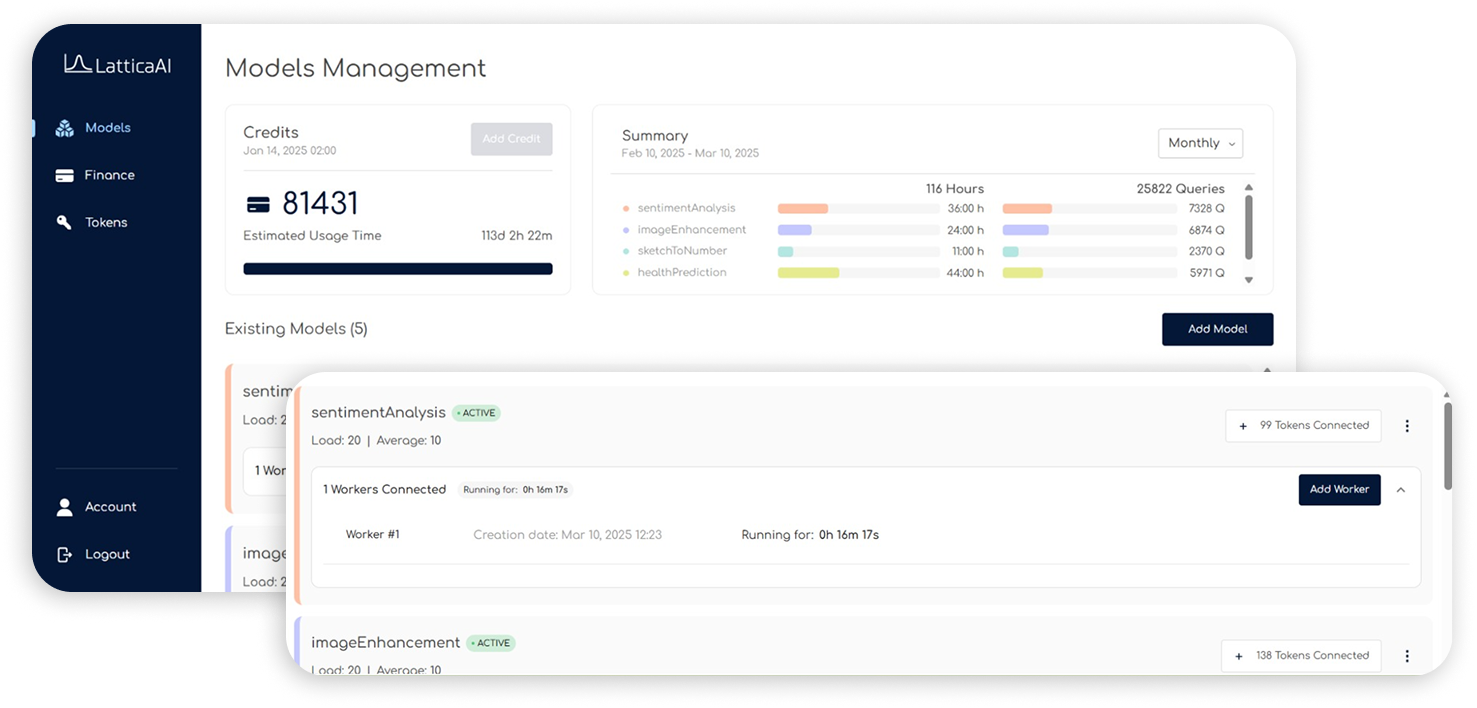

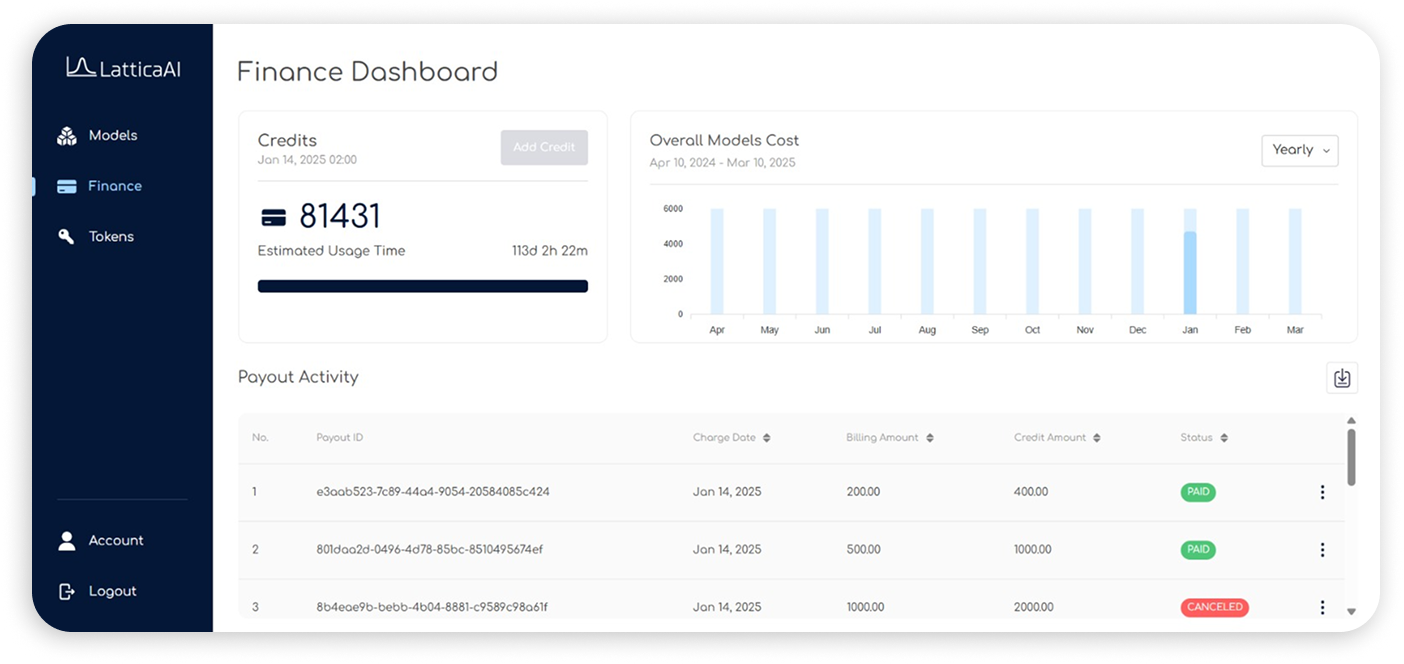

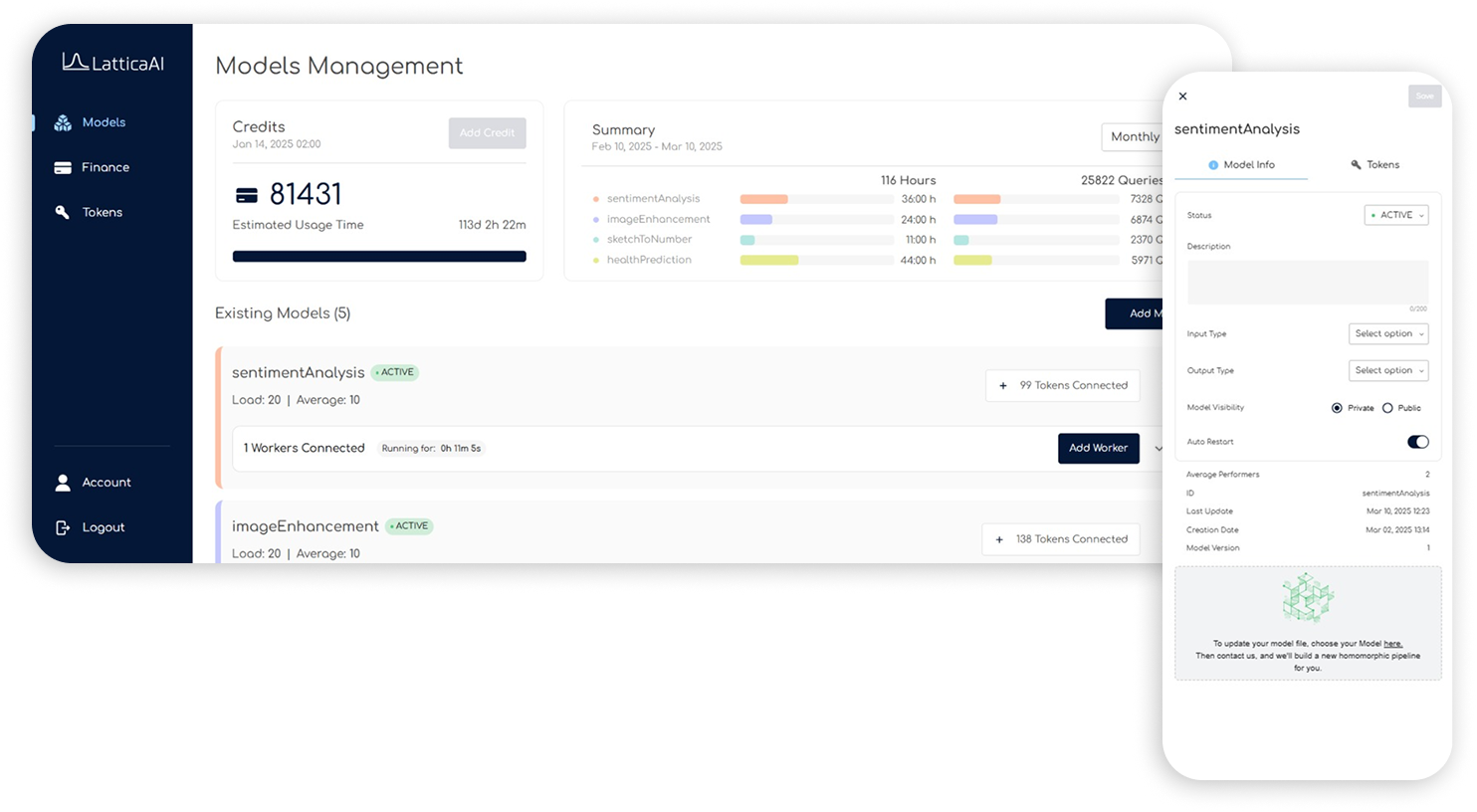

Lattica is a platform for AI providers to host models, manage access, and allocate compute resources. End users can run encrypted queries on these models without exposing their data.

Built on the Lattica FHE stack, our platform runs inference under fully homomorphic encryption (FHE): models compute directly on encrypted inputs, so AI providers never see user data in plaintext.